WebPerfWG F2F summary - June 2019

Last month the Web Performance WG had a face to face meeting (or F2F, for short), and I’ve wanted to write something about it ever since. Now I’m stuck on an airplane, so here goes! :)

The F2F was extremely fun and productive. While most of the group’s regular attendees were there, we also had a lot of industry folks who recently joined, which made the event extremely valuable. There’s nothing like interaction between browser and industry folks to get a better understanding of each other’s worlds and constraints.


If you’re curious, detailed minutes as well as the video from the meeting are available. But for the sake of those of you who don’t have over 6.5 hours to kill, here’s a summary!

# Highlights

- ~31 attendees (physical and remote) from all major browser vendors, but also from analytics providers (SpeedCurve and Akamai), content platforms (Salesforce, Shopify), large web properties (Facebook, Wikipedia, Microsoft Excel), as well as ad networks (Google Ads and Microsoft News).

- We spent the morning with a series of 7 presentations from industry folks, getting an overview of how they are using the various APIs that the group has developed, what works well, and most importantly, where the gaps are.

- Then we spent the afternoon diving into those gaps, and worked together to better define the problem space and how we’d go about tackling it. As part of that we talked about:

- Memory reporting APIs

- Scheduling APIs

- CPU reporting

- Single page apps metrics

# Industry sessions

We heard presentations from Microsoft Excel, Akamai, Wikimedia, Salesforce, Shopify, Microsoft News and Google Ads. My main takeaways from these sessions were:

# High-level themes

- Customers of analytics vendors are not performance experts, so automatic solutions that require no extra work from them are likely to get significantly more adoption.

- Measuring performance entries using PerformanceObserver currently requires developers to run their scripts early, or deal with missing entries. Buffering performance entries by default will help avoid that anti-pattern.

- When debugging performance issues only seen in the wild based on RUM data, better attribution can help significantly. We need to improve the current state on that front.

- Image compression and asset management is hard.

- Client Hints will help

- Image related Feature Policy instructions can also provide early development-time warnings for oversized images.

- Origin Trials are great for web properties, but CMSes and analytics vendors cannot really opt into them on behalf of their customers. Those systems don’t always control the site’s headers, and beyond that, managing those trials adds a lot of complexity.
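To illustrate the PerformanceObserver point above: the `buffered` flag lets a late-loading script receive entries that were recorded before it started observing. The sketch below is a minimal wrapper, not a definitive implementation. The helper name `observeBuffered` and the `*Like` types are made up for this example, and the observer constructor is injectable only so the snippet can also run outside a browser.

```typescript
// Minimal shapes for the parts of the Performance Timeline used here.
// These "Like" types are illustrative stand-ins, not the official typings.
interface EntryLike {
  name: string;
  entryType: string;
  startTime: number;
  duration: number;
}
interface EntryListLike {
  getEntries(): EntryLike[];
}
type ListCallback = (list: EntryListLike) => void;
interface ObserverLike {
  observe(options: { type: string; buffered?: boolean }): void;
  disconnect(): void;
}
type ObserverCtor = new (callback: ListCallback) => ObserverLike;

// Observe a single entry type and ask for entries buffered before we registered.
// Returns undefined when no PerformanceObserver implementation is available.
function observeBuffered(
  type: string,
  onEntry: (entry: EntryLike) => void,
  Ctor: ObserverCtor | undefined = (globalThis as any).PerformanceObserver
): ObserverLike | undefined {
  if (typeof Ctor !== "function") return undefined;
  const observer = new Ctor((list) => {
    for (const entry of list.getEntries()) onEntry(entry);
  });
  // `buffered: true` is what removes the need to run this script early.
  observer.observe({ type, buffered: true });
  return observer;
}
```

An analytics snippet could call `observeBuffered("resource", report)` whenever it happens to load, where `report` is whatever callback the vendor uses, and still see the resources fetched earlier (up to the browser's buffer limits).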

# Missing APIs

# Single page apps measurement

- Single page apps don’t currently have any standard methods to measure their performance. It became clear that the group needs to build standard methods to address that. See related discussion.

# Runtime performance measurement

- Frame timing

- Sites want to keep track of long frames that reflect performance issues in the wild. Currently there’s no performance-friendly way to do that, so they end up (ab)using rAF for that purpose, draining users’ batteries.

- More metrics regarding the main thread and what is keeping it busy
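For readers who haven't seen the rAF workaround mentioned above, here is a rough sketch of what it tends to look like. The 50 ms threshold and the names `findLongFrames` and `watchLongFrames` are assumptions made for this example, not something presented at the meeting.

```typescript
interface LongFrame {
  start: number;    // timestamp of the previous frame callback, in ms
  duration: number; // gap until the next frame callback, in ms
}

// Pure helper: given consecutive frame timestamps, return the gaps
// that are longer than the threshold.
function findLongFrames(timestamps: number[], thresholdMs: number = 50): LongFrame[] {
  const result: LongFrame[] = [];
  for (let i = 1; i < timestamps.length; i++) {
    const duration = timestamps[i] - timestamps[i - 1];
    if (duration > thresholdMs) {
      result.push({ start: timestamps[i - 1], duration });
    }
  }
  return result;
}

// Browser wiring: an endless requestAnimationFrame loop. It does nothing
// when requestAnimationFrame is unavailable (e.g. outside a browser).
function watchLongFrames(
  onLongFrame: (frame: LongFrame) => void,
  thresholdMs: number = 50
): void {
  if (typeof (globalThis as any).requestAnimationFrame !== "function") return;
  const raf = (cb: (now: number) => void): void => {
    (globalThis as any).requestAnimationFrame(cb);
  };
  let previous: number | undefined;
  const tick = (now: number): void => {
    if (previous !== undefined) {
      for (const frame of findLongFrames([previous, now], thresholdMs)) {
        onLongFrame(frame);
      }
    }
    previous = now;
    raf(tick); // re-registering on every frame is what keeps the device busy
  };
  raf(tick);
}
```

The loop has to schedule a callback for every single frame just to notice the occasional slow one, which is exactly the battery cost the bullet above complains about.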

# Scheduling

- Rendering signal to tell the browser that it should stop focusing on processing DOM nodes and render what it has.

- Isolation of same-origin iframes, to prevent main-thread interference between them and the main content

- Lazy loading JS components can often mean that other page components will hog the main thread. Better scheduling APIs and isInputPending/isPaintPending APIs can help with that.

- There are inherent trade-offs between the user’s need to see the content, the advertiser’s need to capture the user’s attention and the publisher’s need to make money. Having some explicit signals from the publisher on the preferred trade-off can enable the browser to better align with the publisher’s needs (but can also result in a worse user experience).
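As a rough illustration of how an isInputPending-style signal could help with main-thread hogging, the sketch below runs queued work until input is pending and then yields. It assumes the signal is exposed as `navigator.scheduling.isInputPending()` and feature-detects it. The names `WorkItem`, `runChunk` and `drain` are hypothetical, and isPaintPending is left out.

```typescript
type WorkItem = () => void;

// Feature-detected wrapper. Where the API is missing this always returns
// false, so runChunk simply runs the whole queue in one go.
function inputIsPending(): boolean {
  const scheduling = (globalThis as any).navigator?.scheduling;
  return (
    typeof scheduling?.isInputPending === "function" &&
    scheduling.isInputPending() === true
  );
}

// Run items in order, always making progress on at least one, and stop as
// soon as the browser reports pending input. Returns the items not yet run.
function runChunk(
  queue: WorkItem[],
  inputPending: () => boolean = inputIsPending
): WorkItem[] {
  let done = 0;
  while (done < queue.length) {
    queue[done]();
    done++;
    if (inputPending()) break;
  }
  return queue.slice(done);
}

// Driver: hand control back to the browser between chunks.
function drain(queue: WorkItem[]): void {
  const remaining = runChunk(queue);
  if (remaining.length > 0) {
    setTimeout(() => drain(remaining), 0);
  }
}
```

A lazy-loaded component could split its initialization into small `WorkItem`s and pass them to `drain`, so a tap or keypress gets handled between chunks instead of waiting for the whole batch.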

# Backend performance measurement

- Different CMSes suffer from similar issues around app and theme profiling. It would be good to define a common convention for that. Server-Timing can help.
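To make the Server-Timing suggestion a bit more concrete, here is a small sketch of how a CMS backend might serialize per-phase durations into a `Server-Timing` header value. The metric names `app` and `theme` are invented for this example, since no common convention was agreed on, and `formatServerTiming` is a hypothetical helper.

```typescript
interface ServerMetric {
  name: string;   // metric name; must be a valid HTTP token (no spaces, quotes, etc.)
  dur?: number;   // duration in milliseconds
  desc?: string;  // optional human-readable description
}

// Serialize metrics as: name;dur=123;desc="text", name2;dur=4
function formatServerTiming(metrics: ServerMetric[]): string {
  return metrics
    .map((m) => {
      let entry = m.name;
      if (m.dur !== undefined) entry += `;dur=${m.dur}`;
      if (m.desc !== undefined) {
        // Escape backslashes and double quotes inside the quoted string.
        const escaped = m.desc.replace(/(["\\])/g, "\\$1");
        entry += `;desc="${escaped}"`;
      }
      return entry;
    })
    .join(", ");
}

// Example: time spent in app code vs. theme rendering (hypothetical names).
const headerValue = formatServerTiming([
  { name: "app", dur: 12.5 },
  { name: "theme", dur: 30, desc: "Theme render" },
]);
// headerValue is: app;dur=12.5, theme;dur=30;desc="Theme render"
```

On the client, these values surface in the `serverTiming` array of navigation and resource timing entries, so a RUM library can pick them up without any CMS-specific code.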

# Device information

- CPU reporting as well as “browser health” came back as a recurrent theme, so we decided to dedicate an afternoon session to hashing out that problem. See discussion below.

# Memory reporting

- Memory leak detection would be really helpful. We discussed this one later as well.

# Gaps in current APIs

- Long Tasks’ lack of attribution is a major reason why people haven’t adopted the API

- Resource Timing needs some long-awaited improvements: better initiator, non-200 responses, insight into in-flight requests, “fetched from cache” indication, and resource processing time.

- Making metrics from same-origin iframes available to the parent would simplify analytics libraries.

- Current API implementations suffer from bugs which make it hard to tell signal from noise. Implementations need to do better.

# Deep Dives

# JS Memory API

Ulan Degenbaev from Google’s Chrome team presented his work on a JavaScript heap memory accounting API. The main use-case he’s trying to tackle is one of regression detection - getting memory accounting for their apps will enable developers to see when things changed significantly, and catch memory regressions quickly.

This is not the first proposal on that front - but previous proposals to tackle memory reporting have run into trouble when it comes to security. This proposal internalized that lesson and comes with significant cross-origin protections from the start.

After his presentation, a discussion ensued about the security characteristics of the proposal and reporting of various scenarios (e.g. where should objects created in one iframe and moved to another be reported?)

Afterwards the discussion drifted towards reporting of live memory vs. GCed memory, and trying to figure out if there’s a way to report GCed memory (which is a much cleaner signal for memory regression detection), without actually exposing GC times, which can pose a security risk.

Then we discussed the Memory Pressure API - another older proposal that was never implemented, but that now sees renewed interest from multiple browser vendors.

# Scheduling APIs

Scott Haseley from the Chrome team talked about his work on main thread scheduling APIs, in order to help developers break-up long tasks. Many frameworks have their own scheduler, but without an underlying platform primitive, they cannot coordinate tasks between themselves and between them and the browser. Notifying the browser of the various tasks and their priority will help bridge that gap. The presentation was followed by a discussion on cases where this would be useful (e.g. rendering/animating while waiting for user input, cross-framework collaboration), and whether priority inversion should necessarily be resolved by the initial design.

# CPU reporting

Many folks in the room wanted a way to tell two things: how much theoretical processing power does the user’s device have, and how much of that power is currently available to me?

The use cases for this range from debugging user complaints, normalizing performance results and blocking some 3rd party content on low powered devices, to serving content which requires less CPU (e.g. replacing video ads with still-image ones).

Currently these use-cases are somewhat tackled by User-Agent string based profiling, but that’s inaccurate and cumbersome.

There is also an obvious tension in such an API, as it’s likely to expose a lot of fingerprintable entropy, so whatever we come up with needs to expose the least number of bits possible.

Finally, we managed to form a task force to gather up all the use cases so that we can outline what a solution may look like.

# Single Page App metrics

Many of our metrics are focused around page load: navigation timing, paint timing, first-input timing, largest-contentful-paint and maybe others. There’s no way for developers or frameworks to declare a “soft navigation”, which will reset the clock on those metrics, notify the browser when a new navigation starts, enable by-default buffering of entries related to this soft navigation and also potentially help terminate any in-flight requests that are related to the previous soft navigation.

Analytics providers use a bunch of heuristics to detect soft navigations, but it’s tricky, fragile and inaccurate. An explicit signal would be significantly better.

During that session we discussed current heuristic methods (e.g. watching pushState usage) and whether they can be a good fit for in-browser heuristics, or if an explicit signal is likely to be more successful.
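For context, the pushState-watching heuristic mentioned above usually boils down to wrapping `history.pushState`. The sketch below shows the general idea under a few assumptions: `watchPushState` and `HistoryLike` are names invented here, the history object is passed in so the snippet can run against a fake, and real libraries combine this with other signals (e.g. `popstate` events and network or DOM activity).

```typescript
// The subset of the History interface this sketch relies on.
interface HistoryLike {
  pushState(data: unknown, unused: string, url?: string | null): void;
}

type SoftNavigationCallback = (
  url: string | null | undefined,
  time: number
) => void;

// Wrap pushState so every call is reported as a candidate soft navigation.
// Returns a function that restores the original method.
function watchPushState(
  target: HistoryLike,
  onSoftNavigation: SoftNavigationCallback,
  now: () => number = () => Date.now()
): () => void {
  const original = target.pushState;
  target.pushState = function (this: HistoryLike, data, unused, url) {
    original.call(this, data, unused, url); // keep the real behavior first
    onSoftNavigation(url, now());
  };
  return () => {
    target.pushState = original;
  };
}
```

In a page this would be called as `watchPushState(window.history, callback)`. The fragility is easy to see: not every `pushState` call is a navigation the user would recognize, and some apps change views without touching history at all.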

We also had a side discussion about “component navigations” in apps where each one of the components can have a separate lifecycle.

Finally, we agreed that a dedicated task force should gather up the use-cases that will enable us to discuss a solution in further depth.

# WG Process

Finally, we discussed the WG’s process and a few points came up:

- Would be great to have an IM solution to augment the team’s calls.

- Transcripts are useful, but scribing is hard. We should try to improve that process.

- An onboarding guide could help new folks get up to speed.

- Separating issue discussion from design calls helps folks who are not involved in the old bugs’ details. We could also create topic-specific task forces that can have their own dedicated calls.

# Feedback

Personally, I was looking forward to the F2F, and it didn’t disappoint. It felt like a true gathering of the performance community, where browser folks were outnumbered by folks that lead the web performance work in their companies, and work with the group’s APIs on a daily basis.

I was also happy to see that I’m not alone in feeling that the day was extremely productive. One browser vendor representative told me that the event was full of “great presentations with so much actionable info. I feel like every WG should run such a session”, which was a huge compliment.

Another person, from the industry side of things, for whom this was the first WG meeting they attended, said it was “a great conference” and that they “learned a ton”.

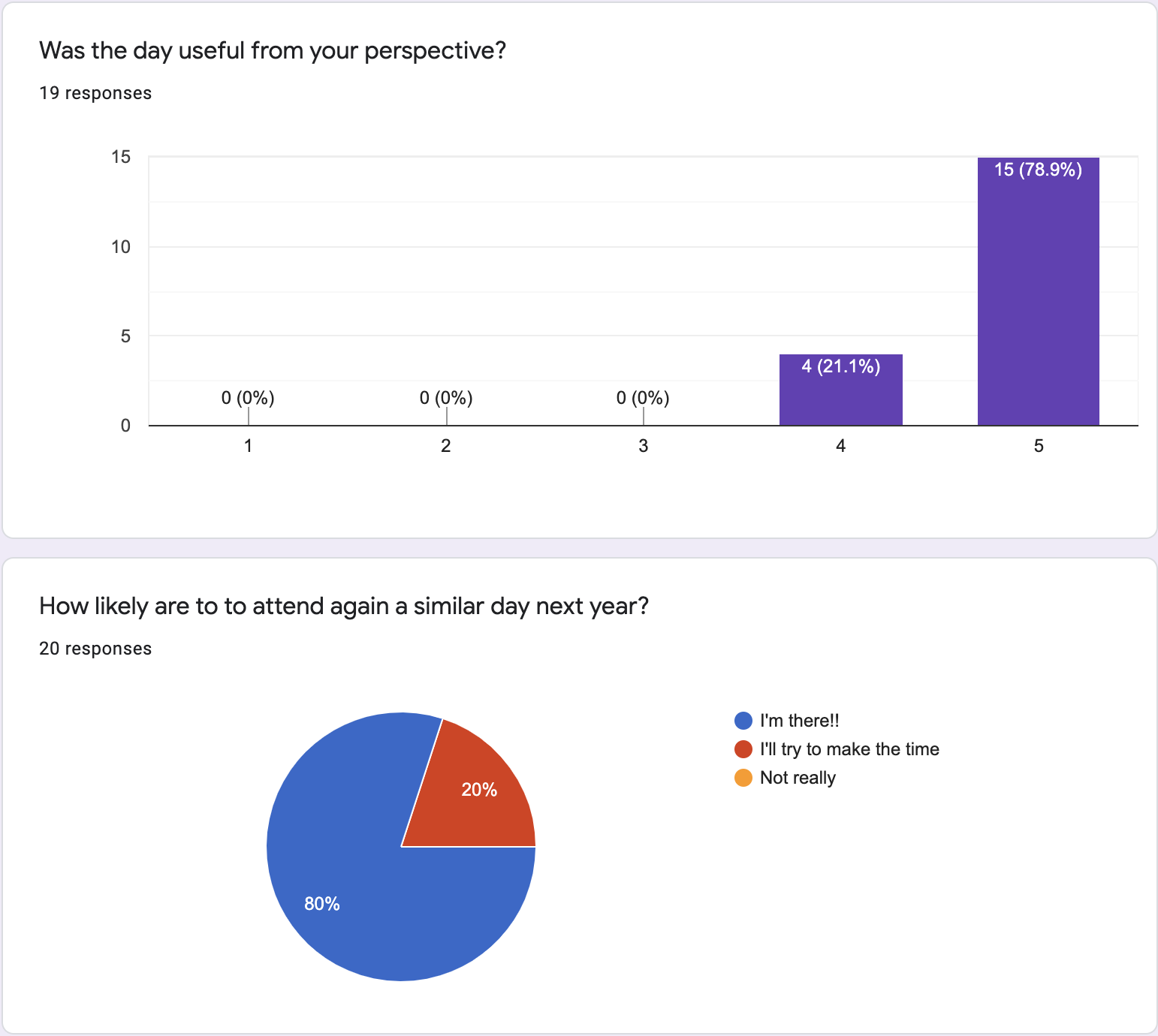

And the post-F2F survey results showed a 4.78 (out of 5) score for the question “was the day useful?”, and that 78.9% of attendees will definitely attend again, while 21.1% will try to make it.

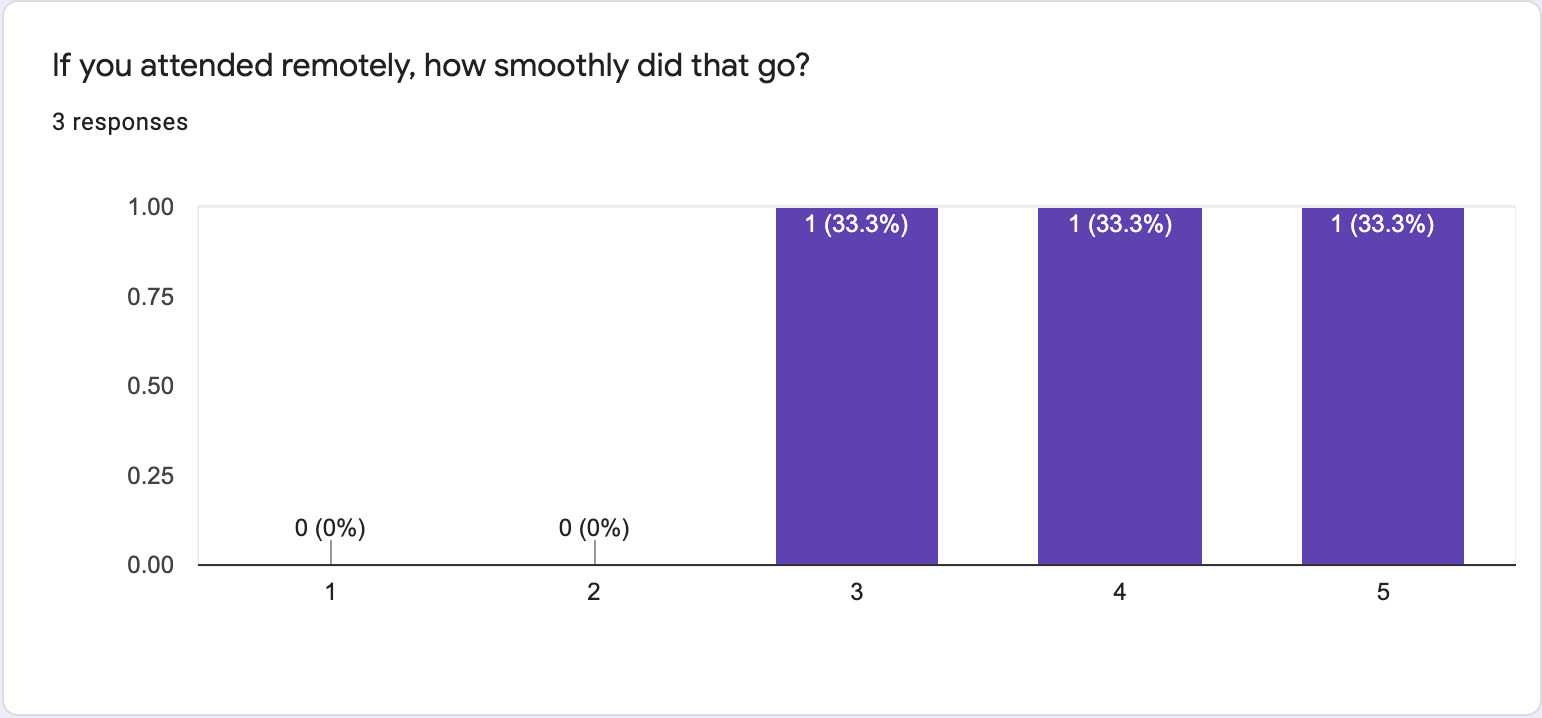

The only downside is that it seems remote attendance wasn’t as smooth as it should’ve been, scoring 4/5 on average. Folks also wished there was a social event following the work day, which we should totally plan for next time.

And yes, given the positive feedback the day received, I think we’ll definitely aim to repeat it next year.

# Hackathon

The F2F meeting was followed by a full-day hackathon where we managed to get a bunch of WG members into a room and hash out various long-standing issues. As a result we had a flurry of activity on the WG’s GitHub repos:

- Navigation Timing

- Made progress on a significant issue around cross-origin reporting of navigation timing, which resulted in a PR that has since landed.

- Otherwise, landed 2 more cleanup PRs, and made some progress on tests.

- Performance Timeline

- Moved supportedEntryTypes to use a registry, cleaned up specifications that relied on the previous definition and backported those changes to L2.

- requestIdleCallback

- Had a fruitful discussion on the one remaining thorny issue that’s blocking the spec from shipping, resulting in a clear path towards resolving it.

- Page Visibility

- 3 PRs that removed the “prerender” value, better defined the behavior when the window is obscured, and improved the cross-references. Once those land, we’d be very close to shipping the spec.

- LongTasks saw 6 closed issues, 3 newly opened ones, 24 comments on 11 more issues, and 4 new cleanup PRs.

- Paint Timing got a couple of cleanup PRs.
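As a side note on the `supportedEntryTypes` work listed above: the attribute is what lets scripts feature-detect an entry type before observing it. A minimal sketch follows, where the helper name `supportsEntryType` is made up for this example and the constructor is injectable so the snippet also runs outside a browser.

```typescript
// Only the static attribute matters for this check.
interface ObserverCtorLike {
  supportedEntryTypes?: readonly string[];
}

// True when the given PerformanceObserver implementation advertises the type.
// Implementations without supportedEntryTypes are treated as unsupported.
function supportsEntryType(
  type: string,
  ctor: ObserverCtorLike | undefined = (globalThis as any).PerformanceObserver
): boolean {
  const supported = ctor?.supportedEntryTypes ?? [];
  return supported.indexOf(type) !== -1;
}
```

An analytics library could use `supportsEntryType("longtask")` to decide whether to register a Long Tasks observer or fall back to another signal.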

# Summary

For me, the main goal of this F2F meeting was to make sure the real-life use cases for performance APIs are clear to everyone working on them, to prevent us going full speed ahead in the wrong direction. I think that goal was achieved.

Beyond that, we made huge progress on subjects that the WG has been noodling on for the last few years: Single-Page-App reporting, CPU reporting and FrameTiming. We’ve discussed them, have a clear path forward, and assigned task forces to further investigate those areas and come back with clear use-case documents. I hope we’ll be able to dedicate some WG time to discussing potential designs to resolve those use-cases at TPAC.

Finally, the fact that we were able to see so many new faces in the group was truly encouraging. There’s nothing like having the APIs’ “customers” in the room to make sure we stay on track and develop solutions that will solve real-life problems, and significantly improve user experience on the web.

Thanks to Addy Osmani, Kris Baxter, and Ilya Grigorik for reviewing!